The hosts discuss the progress of the July Google Core Update, how to handle permanent redirects over the long haul, whether dedicated hosting has any SEO benefit, additions to the review options in Google My Business, and more.

Noteworthy links from this episode:

- WordPress 5.8 Released With Nearly 300 New Features & Fixes

- Akamai Outage Pulls Major Sites Offline Globally

- July Core Update is Effectively Complete

- Google will pass permanent signals with a redirect after a year

- Users can now leave more detailed restaurant reviews on Google

- Joy Hawkins Test: despite recent rumors, nothing has changed and geo-tagging images still has no impact on rankings

Transcription of Episode 411

Ross: Hello, and welcome to SEO 101 on WMR.fm, episode number 411. This is Ross Dunn, CEO of Stepforth Web Marketing and my co-host is my company’s Senior SEO, Scott Van Achte. I guess we’re going to be delivering the 411 on SEO today.

Scott: It’s funny you say that because we have this call before we start recording, and I was gonna mention, “we should say, you’re here for the 411” and I didn’t even bring it up and you went there anyway.

Ross: I went there anyway, not quite as planned.

Scott: No, no. He got there.

Ross: I’m not known for eloquence. Anywho, a lot’s going on. You and I have been just run off our feet these days, business and learning and summer. It’s a good life. We’re actually getting away from the office occasionally. Right?

Scott: It has been good. I’ve been working from home for ages. It’s not new due to COVID but I gotta say, today on my lunch break, I went out and I did some gardening. I sat and I just picked and stuffed my face with blueberries for a bunch, like 10 minutes or so, typical summer stuff. It’s hard to be in a bad mood with good weather in the summer. Yeah, it’s been good.

Ross: Agreed. Well, there is a lot to share today. First, we’ll start off with our other news. There is always something that impacts the world of the internet and in this case, probably more SEO than anything. It’s the latest update to WordPress and this is a big one, a long time in coming. Three hundred new features and fixes.

Scott: There are three or four people that are not going to be happy about that.

Ross: Exactly. I wish it was only three or four. Most government places are going to have a problem with that, probably. Have you noticed? Ask the people about the computers when you’re at any government location. They’re ancient.

Scott: For sure.

Ross: Scary.

Anywho, they’ve enhanced their blocks system for building. It’s funny, no one ever mentioned Gutenberg here but as far as I know, that’s what it is. We don’t actually use them, we use Divi but as far as I know, the block-based design system is called Gutenberg. Again, it’s not noted in this article by Roger Montti, but it’s pretty interesting. One thing I particularly loved about this list of things that changed is that, when you go to a post, you can actually, with a press of a button, go to a bit of a template design section and alter that particular page instead of applying to all templates. Pretty cool.

Scott: That’s gonna be a really cool feature for a lot of people.

Ross: Yeah, because I’ve kind of always wished, “Oh, I wish this page was laid out a little better for this particular article. I don’t have time to create another template, you know, there’s just no time” but this is pretty slick. Assuming it’s easy to work with and somewhat flexible. Again, not my area but if it’s anything like Divi, I’m sure it’s going to be pretty amazing, hopefully even better.

What else is going on here, pattern transformations tool, a feature that suggests block patterns. I have no idea what that means. Social icon blocks, query blocks, I guess is how they’re laid out. One thing that is kind of neat is duotone filters. If you ever take photos and use any of those apps on your phone to apply Pantone or black and white or old style, all that kind of thing. Well, it’s not going to be quite, I don’t think, as organized as that. You can apply your own filters over images and over videos to add a different style and create a bit of an aura over the page, for lack of better words here, but a feel for it, which is a little different and unique. There are much more complex things also. One that stood out for me was the addition of support for WebP.

Scott: I saw that.

Ross: WebP is a new generation image format. It’s weird, I don’t know how many systems have said, “Here you can use a WebP image here and upload this” but nothing supported it so why would I?

Scott: Yeah, good note.

Ross: Anyway, it is now supporting it. WebP has the potential to be quite revolutionary in terms of lowering the file size of images and making them easier to load. I don’t know all the detail about that, but I’m sure there’s a lot of material online about it.

Scott: If there isn’t already, I’m sure there will be a plugin to do a mass conversion of everything to WebP on your WordPress site, at a click of a button. I’m sure that will exist soon if it doesn’t already exist out there.

Ross: Yeah, I suppose so. Maybe the image optimization tools will have that built in. Actually, I know one of them does. Which one is the one we use all the time? You look at it more often than I.

Scott: I’m not sure, there are a few. I know we were using EWWW for a while there. There was Kraken as well. I can’t remember the names; I don’t do a lot on that end of things, but certainly I do it from time to time. I can’t think of a name.

Ross: Anyways, I know there’s one where you can click a button and it will switch them to WebP but, obviously, I hadn’t done it, for good reason.
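A side note for anyone taking stock of a media library before trying one of those conversion plugins: the sketch below checks which image files are already WebP by reading the file header rather than trusting the extension. It assumes only the published WebP container layout (a RIFF header with a `WEBP` tag); the function names and the folder argument are made up for illustration and are not part of WordPress or any plugin.

```python
from pathlib import Path
from typing import List


def is_webp(data: bytes) -> bool:
    """Return True if the bytes begin with a WebP container header."""
    # A WebP file is a RIFF container: bytes 0-3 are b"RIFF",
    # bytes 4-7 hold the file size, and bytes 8-11 are b"WEBP".
    return len(data) >= 12 and data[:4] == b"RIFF" and data[8:12] == b"WEBP"


def non_webp_images(folder: str) -> List[str]:
    """List image files under `folder` that are not WebP yet (hypothetical helper)."""
    image_suffixes = {".jpg", ".jpeg", ".png", ".gif", ".webp"}
    pending = []
    for path in sorted(Path(folder).rglob("*")):
        if path.is_file() and path.suffix.lower() in image_suffixes:
            with path.open("rb") as handle:
                header = handle.read(12)  # the header check needs only 12 bytes
            if not is_webp(header):
                pending.append(str(path))
    return pending
```

Pointing `non_webp_images` at a local copy of an uploads folder would give a rough list of files a converter still has to handle.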

Anyway, he doesn’t even touch, he barely scratches the surface of how many things have been fixed but he does cover the main stuff in this article on Search Engine Journal. So if you want to learn more, I’m sure you can go to the WordPress site and scour the update. It’s going to be pretty interesting. I would wait a little bit. Yes, they test them but we’ve had issues before when upgrading. It’s pretty hard to downgrade and who knows what kind of issues that’s going to cause. You’re also going to want to make sure that the theme that you’re using, that your site was designed with, is going to support it. The questions that could come up with that are numerous. I have no idea if this is going to have any impact on any theme or if it’s going to shift for all of them. That’s sort of the way things work with WordPress updates. Sometimes it’s seamless.

Scott: It’s such a gamble. We’ve definitely seen Divi sites completely break as a result of WordPress updates and then we’ve seen the opposite where Divi updates break because it’s the wrong version of WordPress. I guess the key here is backup before you update.

Ross: Always. You always have to. Always have the ability just to press a button again to restore everything back to the way it was so you’re not going to be in trouble; or even better, if you have the ability to, install it in a sandbox, then see what it looks like and make sure everything’s working. Essentially, what that means is you create a dev version of your domain, so dev.Stepforth.com. This is blocked to the public. It’s got a copy of your website, and then you can update WordPress within that particular hidden installation and see how it affects the site. That’s the safest way to go. If it works, most systems like WP Engine, where we host, will allow you to just press another button, which will then push that live to the main website. It’s amazing how much stuff you can do.

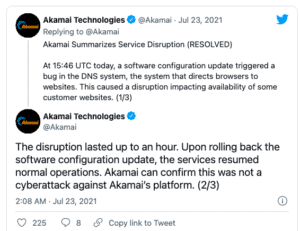

Anyway, let’s jump now into another piece here. I thought this was pretty crazy. Akamai. Akamai is one of the most popular content delivery networks in the world. They had an outage today that pulled major sites offline, globally.

Scott: That’s an expensive bug, I’m sure.

Ross: Yeah. No doubt about it.

Scott: It’s kind of frightening how easily you can take down our digital infrastructure. One link goes down for an hour and now people can’t do their banking. They can’t do their…whatever else they were doing. You know, the gamers can’t game. Whatever.

Ross: Airbnb went down.

Scott: Airbnb was on the list? That’s big.

Ross: It’s huge. Well, yeah. Why don’t you jump to the next piece of news? I’ll just find the list here and I’ll rattle them off after you’ve done that one.

Scott: Okay, sure. We’re moving on into SEO news now. As of last week, July 12 actually, so a little more than a week ago, the July core update has been announced as effectively complete. You should not be seeing any more fluctuation. If you are waiting for part two of the July core update to fix anything, if it hasn’t happened by now, it’s not going to. Not a lot to say on that but good to know that it’s done. There are still other updates happening. You know, your daily updates that go on multiple times a day throughout the year and of course, the page experience update, which will be running until, I believe, around the end of August before that’s expected to be done.

Ross: This is the summer of updates.

Scott: It really is, and who knows, maybe there will be an August core update and that’s great. You just never know.

Ross: Got to keep us on our toes. It’s pretty impressive how many people got affected: Amazon, Airbnb, FedEx, Delta Airlines, UPS, USAA, Home Depot, HBO Max, Costco, and the list just goes on. Insane, wow, wouldn’t want to be them. Okay, moving on.

Scott: Well, luckily, we were still in bed when that happened and so we didn’t even notice.

Ross: Yeah, there you go. Okay, so Google will pass permanent signals with a redirect after a year. This is a note from Gary Illyes, I believe. He’s such a snarky dude. Here, this is a quote you posted here, “hands up if you asked us recently for how long you should keep redirects in place! I have a concrete answer now: at least 1 year (but try keeping them indefinitely if you can for your users)”

Scott: Is that really concrete?

Ross: Yeah, I don’t know. You’ve got some notes out. Go for it.

Scott: Oh, yeah. A few things to note there. Technically, this could happen in less than a year. So, it might happen sooner, but it’s best to leave your redirects up for at least that year to make sure Google will pass those signals from your original URL to your destination, and it will be permanent. Even if you pull the redirect in the future, any value that was sent across will still exist afterwards. They do note, was it Gary that noted it? Yeah, keep it indefinitely for your users, if you can, because just because Google has made the redirect permanent doesn’t mean you’re not going to break things for your users by removing it. Google will still love you, but my rule of thumb is to leave these types of redirects up forever, really, if it’s reasonably easy to do so.

Ross: If you’re talking huge sites, you can’t do that. If you’re really worried about it, let’s say, it’s a really key or cornerstone content page of your site, or was, and it’s being moved, then you’re going to have to do some legwork. Go around and hit the places that you’re getting the most traffic from and tell them to update their link. You do run the risk of losing the page or the link so that’s got to be considered but it’s certainly worthwhile if you’re going to remove that redirect, but I wouldn’t.

Scott: No, just a suggestion.

Ross: It just makes no sense.

Scott: Like you said, unless you’ve got a million-page website that’s all been redirected, just leave them up.

Ross: Yeah, I don’t even want to think what John Carcutt would have to go through with something like that, with all those newspapers.

Scott: That’s a lot of content.

Ross: As he’s mentioned before, some news content resurfaces again because something similar happens. If you don’t have those redirects in place, if something has been moved, which I’m sure they don’t do lightly, you lose out on the potential, like a huge, huge jump in traffic potentially. Redirects are not a small thing. It varies in terms of complexity and intensity based on the site you have, how big a readership you have, and just how detailed you are about tracking everything.
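For anyone who keeps a list of their redirects, the "at least 1 year" guidance from the quote above can be sketched as a simple age check. This is only an illustration: the redirect records, field names, and dates below are invented, and passing the check means a redirect is old enough to be reviewed, not that removing it is a good idea for your users.

```python
from datetime import date

# "At least 1 year", per the quote discussed above.
MIN_REDIRECT_AGE_DAYS = 365


def old_enough_to_review(created: date, today: date) -> bool:
    """True once a redirect has been in place for at least a year."""
    return (today - created).days >= MIN_REDIRECT_AGE_DAYS


# Hypothetical redirect log; the paths and dates are made up.
redirects = [
    {"source": "/old-page", "target": "/new-page", "created": date(2020, 6, 1)},
    {"source": "/summer-sale", "target": "/sale", "created": date(2021, 3, 15)},
]

today = date(2021, 7, 22)  # fixed date so the example is repeatable
reviewable = [
    r["source"] for r in redirects if old_enough_to_review(r["created"], today)
]
print(reviewable)
```

Anything not on the `reviewable` list is younger than a year and, going by the guidance above, should stay in place regardless.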

Okay, well, let’s take a quick break and when we come back we’re going to talk some local SEO news.

Welcome back to SEO 101 on WMR.fm. Hosted by myself, Ross Dunn, CEO of Stepforth Web Marketing and my company’s Senior SEO, Scott Van Achte.

In the local SEO realm, you posted something about Reviews on Google? I have not seen this so lead the way.

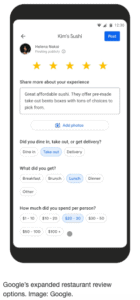

Scott: Yeah. Google has, or, is expanding the way you can review restaurants now.

Ross: Vegas.

Scott: It will be especially good. I’m finding a lot of times now, small restaurants, especially sushi, for some reason, I’m not sure what it is with sushi places. They don’t have websites, they’re just running off of Instagram or Facebook and it drives me bananas because you can’t find all the information you want. You can’t always see a menu. My wife hates it because she doesn’t even use Facebook. Sometimes you can’t even see any of their online presence. Maybe this will help with that in the reviews a little bit for those businesses. Just another place to get a bit more information about them. Currently, this is only available, in terms of leaving the reviews, for people on Android and iOS and for US-based restaurants only. I would expect to see it rolled out on a much wider scale relatively quickly. I can’t see why they would delay it for too long.

Ross: I think it’d be great too. It does add a lot of context if the restaurant owner, or whatever system they’re using to request reviews if they’re smart enough to have one in place, would be able to pre-set some of these like, “I want your review on takeout, review on dine-in.” Those are probably the best examples, and delivery. Just segment them a bit because people don’t necessarily do it themselves. It would be, I think, a really cool way too of having various reviews.

Scott: Absolutely. Even I’m wondering when it comes to embedding Google reviews on your website, and that sort of thing. If you’ve got your breakfast menu on one page, you can throw in the reviews that are specific to breakfast only on that page. You could really take advantage of this and maximize its value to try to convert people.

Ross: Now the sneaky bit here is, how can Google win from this? Just about everything they force us to do or request, or any little feature they add, is to help them, not us. From a smart perspective, if they get reviews for breakfast separately from lunch and dinner and the same for those, they could impose different reviews at different times of day based on when you’re looking at the reviews. It could switch based on the time of day.

Scott: Or even just the search criteria.

Ross: Yeah, and that would add more value and make it more interesting for people, who are actually doing their search, to use it. I think that would be pretty smart.

Scott: Even just like “best breakfast in Victoria” and then the places that have the best breakfast reviews specifically show up.

Ross: And best review for takeout. These days, those are absolutely critical delineations. Even though things are cooling down, at least in Canada (actually, I guess in the States most places are still open), COVID is far from gone; a lot of people still order out and they don’t sit in. So I would expect that that’s a major consideration. I know for me, for example, going to White Spot: when I got takeout there, the food was fine but their packaging was awful.

Scott: Yeah

Ross: About 9 out of 10 times, they had forgotten something. It was killing me and there was always a little sticker on there “Inspected by Dave.” Where the hell is Dave?

Scott: Oh, Dave.

Ross: Yeah, Dave, dammit.

Scott: That makes me think of a feature they should probably have. As a reviewer, sometimes you want to review, maybe you have a really good experience with a restaurant one day and a bad one the next but it doesn’t necessarily mean that your five star review from yesterday goes down to one today. Maybe if you could add multiple reviews, like “here’s my breakfast review and here it’s separate from my dinner review”. Maybe at breakfast time they’re great in the restaurant, the staff is good, but their dinner time staff is just trash.

Ross: That’s a good idea.

Scott: Even for delivery and takeout, like you said, if you’re getting delivery for White Spot and your order’s always wrong, like “hey, you know what, don’t get their delivery, it’s terrible. They always screw it up. But when you go in, the order’s right and they do a good job.” To be able to segment that would probably be really good from a reviewer perspective. Plus you get more points towards your local guide.

Ross: Yeah, I like that. Yes, exactly. Oh, man, I used to be on that bandwagon. I’ve just gotten lazy. I think there’s a lot to unpack here. It’s going to be very interesting to see how Google uses this information. I think we’re on the right track there, though.

Next up, I’d like to check in on what Joy Hawkins is up to. She’s kind of the maven of local SEO. She knows what is going on, all about that and she lives and breathes it. On her local search forum, I noticed that there have been some rumors. I hadn’t heard this but I was just reading it, that geotagging images was helping with rankings. Now, for those who don’t know what that means, you can add, essentially, the latitude and longitude of where that particular image was taken. There was a time where even I thought it was helping because this made sense, right? If you put the latitude and longitude, it’s gonna help Google do a number of things from tagging you in maps to knowing that a particular image was taken in a certain place. Seems really logical to me but what has been proven, again, is that geotagging images still has no impact on rankings. She has confirmed that in a recent test. She’s about to deliver, I think, a presentation on that. Either she already has or is about to. So very fresh information and it’s good to hear that we can just push off those ravings, because someone we respect has already tested it, it’s not actually helping.
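For those curious what "adding the latitude and longitude" looks like in practice: image metadata (EXIF) stores each coordinate as degrees, minutes, and seconds plus a hemisphere reference, under the standard tag names `GPSLatitude`, `GPSLatitudeRef`, `GPSLongitude`, and `GPSLongitudeRef`. The sketch below only does the conversion from decimal degrees; it does not write anything into an image, the helper names are made up, and the coordinates are an approximate, illustrative point in Victoria, BC. Per the test described above, none of this should be expected to affect rankings.

```python
def to_dms(decimal_degrees: float) -> tuple:
    """Convert decimal degrees to (degrees, minutes, seconds), ignoring sign."""
    value = abs(decimal_degrees)
    degrees = int(value)
    minutes_full = (value - degrees) * 60
    minutes = int(minutes_full)
    seconds = (minutes_full - minutes) * 60
    return degrees, minutes, seconds


def gps_fields(latitude: float, longitude: float) -> dict:
    """Build a dict keyed by the standard EXIF GPS tag names (hypothetical helper)."""
    return {
        "GPSLatitudeRef": "N" if latitude >= 0 else "S",
        "GPSLatitude": to_dms(latitude),
        "GPSLongitudeRef": "E" if longitude >= 0 else "W",
        "GPSLongitude": to_dms(longitude),
    }


# Approximate coordinates, used purely as an example.
fields = gps_fields(48.4284, -123.3656)
print(fields)
```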

Scott: Logistically and logically, it looks like it should work. Like you said, it seems totally logical but I wonder if it’s because it’s just too easy to game.

Ross: That’s probably it.

Scott: Like a Meta keyword tag. It’s obvious why they got rid of that. You could just do whatever you want in there. Maybe it’s for similar reasons.

Ross: I still think it wouldn’t hurt to have it. I mean, they could easily do correlation data. They could look at images that have all been taken in the same place and they have a similar geotag, it’s unlikely that was fake. Sure, you’ll get the Google bombers, people who are trying to get something ranking that shouldn’t be ranking but that’s still a very, very small test case. I mean, very, very rare. I really like it from the point of view of historical photographs, to be able to slide, use a slider and go back in time of the images taken in that area. I’m dying to see that. I believe, it’s already been built into Google Earth and certain aspects of that. I know it was somewhere and I’ve been waiting for it for, I think when I first started the podcast, so 12 years ago we were talking about this. How cool would that be? Just go in Street View and just scroll backwards and see things change.

Scott: That’s one thing. With Street View, you can definitely see previous passes from the car. If you could do that but with all these images from all over the web, not just the Street View car, whatever you call it.

Ross: Yeah, publicly-sourced images. They could use 3D images, like people using those 3D cameras. All the stuff’s online. I know it’s a massive job but compressing all this and making it all available in one specific area based on geotags, based on all this other information, that would be immense.

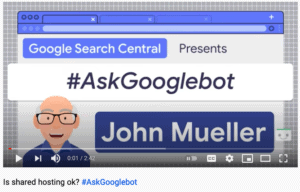

Anywho, let’s jump into some Mueller files. Google says there is no SEO advantage to dedicated hosting. I hadn’t thought of that for years. Good old John gets all the questions. So what’s this one?

Scott: This is something we’ve sort of been talking about off and on forever, dedicated hosting and unique IPs and that kind of stuff. In the latest installment of ‘Ask Googlebot’ on YouTube, which I should probably watch from time to time, John said there is no SEO advantage to dedicated hosting.

Even back in the day, we used to worry about what we were calling ‘bad neighborhood’. If you have a fairly wholesome business, and you are on the same server as a bunch of adult-oriented and gambling-oriented sites, and maybe a bunch of scammers and spammers, that could have been an issue back in the day, and now it isn’t. You don’t have to worry about it. John even replied to that, he said, “another concern we sometimes hear is that there might be other bad websites hosted on the same server. SEOs sometimes call this server a ‘server in a bad neighborhood’. This is not something to be worried about. In practice, most commonly used hosting providers watch out for this on their own. There’s generally a wide range of websites hosted on shared hosting, including some fantastic ones and some bad ones too. For Google this is fine and not problematic. We treat each website based on its own merits, not based on its virtual neighbors.” So that’s good. We’ve kind of known this for a while now but when we first started, when I first started, close to 20 years ago now, I’m fairly confident this was an issue. Bad neighborhoods definitely had an impact because we saw a lot of correlation there. These days, you don’t have to worry about it. Just go for speed, go for the faster server option. If it’s shared, great; if it’s dedicated and you can afford it, great. Just go for the most appropriate for what you need.

Ross: Yeah, agreed. Even back in the day, we would do reverse DNS and look at all the different hosting, all the different sites hosted on that IP address and we’d insist that our clients have their own unique IP address. There was so much they had to do. We didn’t do that for everyone. We would mostly do that for clients who are having issues with rankings, that just unexpectedly, everything plummeted and there really is no obvious reason why. We would look at all of these little, little things that could be causing issues and just eliminate them one by one, and that was one of them. I’m glad to hear that that’s not an issue anymore. Certainly nothing we’ve looked at for years, at least a decade. I can’t think of anything we’ve talked about, or anytime we’ve talked about it since then.
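The old "bad neighborhood" audit described above boiled down to grouping domains by the IP address they resolve to and looking at who shares one. Here is a minimal sketch of that grouping, assuming you already have a domain-to-IP mapping from whatever lookup tool you use; the domains and addresses are placeholders from reserved documentation ranges, and the function name is made up. Given Google's statement, this is shown for historical interest rather than as a recommended check.

```python
from collections import defaultdict


def neighbors_by_ip(domain_to_ip: dict) -> dict:
    """Group domains by IP and keep only the IPs shared by more than one domain."""
    grouped = defaultdict(list)
    for domain, ip in domain_to_ip.items():
        grouped[ip].append(domain)
    return {ip: sorted(domains) for ip, domains in grouped.items() if len(domains) > 1}


# Placeholder data: example domains and documentation-range IP addresses.
sample = {
    "client-site.example": "203.0.113.10",
    "casino-site.example": "203.0.113.10",
    "hobby-blog.example": "198.51.100.7",
}

shared = neighbors_by_ip(sample)
print(shared)
```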

Scott: No, I even did a bit of a test. I’d say maybe a year ago, with my own. I have a couple of websites that are kind of hobby sites of mine and I’ve always been on shared servers because they were cheap. I did a test for about a year where I paid the high rate of, I think, $1.95 a month and switched to a dedicated IP just to see, would it do anything? It did absolutely nothing, nothing at all. It’s probably not worth it, in basically all cases.

Ross: Okay, so another Mueller file is, how long does it take for Google to re-rank a rehabilitated website?

Scott: It does seem kind of long but like you said, if you’re going from 100,000 pages down to 20,000 pages, there’s so much that can go wrong there too. In that six-month window, if you don’t see a recovery within six months, maybe it’s on your own end, maybe you had some bad content that you merged with other content, or just got rid of that you thought was no good but was actually playing a significant role. I would say, six months doesn’t seem super long to me, if you’re looking at a scale of 100,000 to 20,000 pages. If you’re looking at a scale of content from say, 30 pages to 20, something that’s much smaller, I would say it’s probably in a few weeks. I would say the bigger the change, the longer the time you’d have to wait. So it might be realistic.

Ross: Well, we’ll certainly see. I bet there’s gonna be some follow-ups to this if people disagree. Again, we know that people who had gotten hit badly by some of the recent core updates, were in the position of waiting a year until their sites were reevaluated. Yes, they were still crawled. Yes, all that stuff but Google didn’t make that kind of a huge established change to visibility and rankings until they had the next core update so it kind of puts this into some question. Anyways, it’s promising, let’s hope that things have changed a little bit and they’re doing what they should be doing, which is reevaluating more often than just core updates.

With that said, on behalf of myself, Ross Dunn, CEO of Stepforth Web Marketing and my company’s senior SEO Scott Van Achte. Thank you for joining us today. Remember, we have a show notes newsletter, you can sign up for it at seo101radio.com. Don’t miss a single link and refresh your memory of a past show at any time. Have a great week, and remember to tune in to future episodes, which air every week on WMR.fm.

Scott: And thanks for listening, everybody.